Scenario

- Oracle RAC environment

- Oracle Database version 12.1.0.2

- Oracle Grid Infrastructure (cluster) version 12.1.0.2

- Operating system: Oracle Linux 7.5

- EqualLogic storage connected via iSCSI, reachable at IP address 10.20.10.101

Identifying the new disks

As the root user, perform the following steps:

- Save the current disk layout (before the new LUNs are made visible to the server):

# lsblk > lsblk.before.log

- Log in to the iSCSI targets with the iSCSI administration utility (iscsiadm):

# iscsiadm -m node --loginall all

Login to [iface: default, target: iqn.2001-05.com.equallogic:0-8a0906-47a6c4904-2a392d3577a5af99-dbtest10gb-2, portal: 10.20.10.101,3260] successful.

Login to [iface: default, target: iqn.2001-05.com.equallogic:0-8a0906-4796c4904-bdb92d357775af99-dbtest10gb-1, portal: 10.20.10.101,3260] successful.

Login to [iface: default, target: iqn.2001-05.com.equallogic:0-8a0906-47a6c4904-bea92d3577d5af99-dbtest10gb-3, portal: 10.20.10.101,3260] successful.

Login to [iface: default, target: iqn.2001-05.com.equallogic:0-8a0906-f146c4904-13992d357a35afe9-dbtest10gb-4, portal: 10.20.10.101,3260] successful.

Login to [iface: default, target: iqn.2001-05.com.equallogic:0-8a0906-3666c4904-cb892d357ad5c489-dbtest100gb-6, portal: 10.20.10.101,3260] successful.

Login to [iface: default, target: iqn.2001-05.com.equallogic:0-8a0906-36c6c4904-00092d357b05c489-dbtest100gb-7, portal: 10.20.10.101,3260] successful.

Login to [iface: default, target: iqn.2001-05.com.equallogic:0-8a0906-1b76c4904-a7b92d357c95d2de-dbtest10gb-5, portal: 10.20.10.101,3260] successful.

- List the active sessions:

# iscsiadm -m session

tcp: [1] 10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-47a6c4904-2a392d3577a5af99-dbtest10gb-2 (non-flash)

tcp: [10] 10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-4786c4904-51892d3576b5af99-dbtest25gb-1 (non-flash)

tcp: [11] 10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-4786c4904-58992d3576e5af99-dbtest25gb-2 (non-flash)

tcp: [12] 10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-4796c4904-de592d357715af99-dbtest25gb-3 (non-flash)

tcp: [13] 10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-4796c4904-21292d357745af99-dbtest25gb-4 (non-flash)

tcp: [14] 10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-47a6c4904-bea92d3577d5af99-dbtest10gb-3 (non-flash)

tcp: [15] 10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-36c6c4904-00092d357b05c489-dbtest100gb-7 (non-flash)

tcp: [16] 10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-1b76c4904-a7b92d357c95d2de-dbtest10gb-5 (non-flash)

tcp: [17] 10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-1bf6c4904-7e892d357cc5d2de-dbtest10gb-6 (non-flash)

tcp: [2] 10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-4796c4904-bdb92d357775af99-dbtest10gb-1 (non-flash)

tcp: [3] 10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-f146c4904-13992d357a35afe9-dbtest10gb-4 (non-flash)

tcp: [4] 10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-3666c4904-cb892d357ad5c489-dbtest100gb-6 (non-flash)

tcp: [5] 10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-40e6c4904-a8992d3575c5af99-dbtest100gb-1 (non-flash)

tcp: [6] 10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-4776c4904-a5092d3575f5af99-dbtest100gb-2 (non-flash)

tcp: [7] 10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-4776c4904-ef092d357625af99-dbtest100gb-3 (non-flash)

tcp: [8] 10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-4786c4904-52092d357655af99-dbtest100gb-4 (non-flash)

tcp: [9] 10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-4786c4904-e2492d357685af99-dbtest100gb-5 (non-flash)

- Discover the targets (including the new disks) available on the storage:

# iscsiadm -m discovery -t st -p 10.20.10.101

10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-47a6c4904-2a392d3577a5af99-dbtest10gb-2

10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-4796c4904-bdb92d357775af99-dbtest10gb-1

10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-47a6c4904-bea92d3577d5af99-dbtest10gb-3

10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-f146c4904-13992d357a35afe9-dbtest10gb-4

10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-3666c4904-cb892d357ad5c489-dbtest100gb-6

10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-36c6c4904-00092d357b05c489-dbtest100gb-7

10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-1b76c4904-a7b92d357c95d2de-dbtest10gb-5

10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-1bf6c4904-7e892d357cc5d2de-dbtest10gb-6

10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-7a56c4904-99992d357d55d68d-dbtest100gb-8

10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-7ad6c4904-05f92d357d85d68d-dbtest100gb-9

10.20.10.101:3260,1 iqn.2001-05.com.equallogic:0-8a0906-7b36c4904-50492d357db5d68d-dbtest100gb-10

- Log in to the new LUNs:

# iscsiadm -m node -l -p 10.20.10.101

Logging in to [iface: default, target: iqn.2001-05.com.equallogic:0-8a0906-7a56c4904-99992d357d55d68d-dbtest100gb-8, portal: 10.20.10.101,3260] (multiple)

Logging in to [iface: default, target: iqn.2001-05.com.equallogic:0-8a0906-7b36c4904-50492d357db5d68d-dbtest100gb-10, portal: 10.20.10.101,3260] (multiple)

Logging in to [iface: default, target: iqn.2001-05.com.equallogic:0-8a0906-7ad6c4904-05f92d357d85d68d-dbtest100gb-9, portal: 10.20.10.101,3260] (multiple)

Login to [iface: default, target: iqn.2001-05.com.equallogic:0-8a0906-7a56c4904-99992d357d55d68d-dbtest100gb-8, portal: 10.20.10.101,3260] successful.

Login to [iface: default, target: iqn.2001-05.com.equallogic:0-8a0906-7b36c4904-50492d357db5d68d-dbtest100gb-10, portal: 10.20.10.101,3260] successful.

Login to [iface: default, target: iqn.2001-05.com.equallogic:0-8a0906-7ad6c4904-05f92d357d85d68d-dbtest100gb-9, portal: 10.20.10.101,3260] successful.

- Save the disk layout again, now that the new LUNs are visible:

# lsblk > lsblk.after.log

- Identify the new devices by running "diff" on the before and after files:

# diff lsblk.after.log lsblk.before.log

44,49d43

< sdt 65:48 0 100G 0 disk

< sdu 65:64 0 100G 0 disk

< sdv 65:80 0 100G 0 disk

If you are in a RAC environment, repeat the same operation on the other node as well, because the disk names may differ there.
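If you would rather not read the diff by eye, the new disk names can be pulled out of the two snapshots directly. The following is a minimal sketch, assuming the default lsblk column layout (NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT, so TYPE is the sixth column); the function name new_disks is hypothetical.

```shell
# Hypothetical helper: print the whole disks (TYPE "disk") listed in the
# "after" snapshot that were not present in the "before" snapshot.
# Assumes the default lsblk layout, where TYPE is the 6th column.
new_disks() {
  awk '
    $6 == "disk" && FILENAME == ARGV[1] { seen[$1] = 1; next }  # disks already present
    $6 == "disk" && !($1 in seen)       { print $1 }            # disks that appeared later
  ' "$1" "$2"
}

# Usage: new_disks lsblk.before.log lsblk.after.log
```

Partitions (TYPE "part") and the header line are ignored, so only newly visible LUNs are printed, in the order lsblk lists them.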

Creating the partitions

At this point the partitions must be created (again as root).
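The check and the fdisk answers described in the steps of this section can also be combined into one loop that skips any device already carrying a partition. This is a minimal sketch: the function names are hypothetical, and FDISK is an assumed override (defaulting to the real fdisk) that lets you substitute a dry-run command. Pass it only the devices identified with diff above.

```shell
# Hypothetical helper: succeed if a partition node (e.g. /dev/sdn1) exists for the disk.
has_partitions() {
  for p in "$1"[0-9]*; do
    [ -e "$p" ] && return 0   # an unmatched glob stays literal and fails this test
  done
  return 1
}

# Hypothetical helper: create one primary partition spanning each unpartitioned disk.
# FDISK is an assumed override for dry runs; it defaults to the real fdisk.
partition_new_disks() {
  fdisk_cmd=${FDISK:-fdisk}
  for dev in "$@"; do
    if has_partitions "$dev"; then
      echo "skipping $dev: it already has partitions" >&2
      continue
    fi
    # Same answers as the interactive session: n, p, 1, default start, default end, w
    printf 'n\np\n1\n\n\nw\n' | $fdisk_cmd "$dev"
  done
}

# Usage (as root): partition_new_disks /dev/sdt /dev/sdu /dev/sdv
```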

- Verify that the new devices do not already contain partitions. For example, no partition:

# ls -l /dev/sdt*

brw-rw----. 1 root disk 65, 0 Jan 24 08:20 /dev/sdt

Existing partition:

# ls -l /dev/sdn*

brw-rw----. 1 root disk 8, 208 May 22 2018 /dev/sdn

brw-rw----. 1 oracle asmadmin 8, 209 Jan 24 10:17 /dev/sdn1

- Use the fdisk command to partition the disks identified earlier:

# fdisk /dev/sdt

Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel

Building a new DOS disklabel. Changes will remain in memory only, until you decide to write them. After that, of course, the previous content won’t be recoverable.

The number of cylinders for this disk is set to 1305.

There is nothing wrong with that, but this is larger than 1024, and could in certain setups cause problems with:

software that runs at boot time (e.g., old versions of LILO)

booting and partitioning software from other OSs

(e.g., DOS FDISK, OS/2 FDISK)

Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)

Command (m for help): n

Command action

e extended

p primary partition (1-4) p

Partition number (1-4): 1

First cylinder (1-13054, default 1):

Using default value 1

Last cylinder or +size or +sizeM or +sizeK (1-13054, default 13054):

Using default value 13054

Command (m for help): p

Disk /dev/sdt: 107.3 GB, 107374182400 bytes

255 heads, 63 sectors/track, 13054 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdt1 1 13054 94847760 83 Linux

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

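#

In any case, the sequence of answers is: "n", "p", "1", "Return", "Return", "p" and "w". The second "p" only prints the partition table, so it can be left out when the answers are piped in.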

The quickest way is therefore the following:

# echo -e "n\np\n1\n\n\nw"|fdisk /dev/sdt

# echo -e "n\np\n1\n\n\nw"|fdisk /dev/sdu

# echo -e "n\np\n1\n\n\nw"|fdisk /dev/sdv

iSCSI Configuration

Keep in mind that with iSCSI you cannot use the /dev/sdX name to reference the disks, because it can change every time the server reboots. You therefore need to reference them through a persistent name (for example /dev/oracleasm/disk1 or, as in this guide, a udev symlink such as /dev/asm-data08). Let's see how.
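The idea is to reference each LUN by the SCSI ID reported by the storage, which does not depend on the order in which the kernel hands out sdX names. As a minimal sketch, assuming the lsscsi -i layout used in the next step (device node in one column, SCSI ID in the last one), two hypothetical helpers can map a device to its ID and back:

```shell
# Hypothetical helper: read "lsscsi -i" output on stdin and print the SCSI ID
# (last column) of the given device node. Every column is compared because
# vendor and model strings can contain spaces (e.g. "PERC 6/i").
scsi_id_of() {
  awk -v dev="$1" '{ for (i = 1; i <= NF; i++) if ($i == dev) { print $NF; exit } }'
}

# Hypothetical helper: the reverse lookup, i.e. which /dev/sdX currently has a given SCSI ID.
device_of() {
  awk -v id="$1" '$NF == id { for (i = 1; i <= NF; i++) if ($i ~ /^\/dev\//) { print $i; exit } }'
}

# Usage: lsscsi -i | scsi_id_of /dev/sdt
#        lsscsi -i | device_of 36090a04890c4d67a8dd6857d352df905
```

After a reboot the sdX name returned by device_of may change, while the ID passed to it stays the same; that stable ID is what the udev rules below match on.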

- Identify the SCSI IDs of the new devices (assuming the iSCSI session is still open):

# lsscsi -i|sort -k6

[0:0:32:0] enclosu DP BACKPLANE 1.05 - -

[0:2:0:0] disk DELL PERC 6/i 1.11 /dev/sda 36001e4f0317b04002530587a0b0575df

[4:0:0:0] disk Dell Virtual Floppy 123 /dev/sdb Dell_Virtual_Floppy_1028_123456-0:0

[3:0:0:0] cd/dvd Dell Virtual CDROM 123 /dev/sr1 Dell_Virtual_CDROM_1028_123456-0:0

[1:0:0:0] cd/dvd TSSTcorp CDRWDVD TSL462D DE07 /dev/sr0 -

[5:0:0:0] disk EQLOGIC 100E-00 4.3 /dev/sdc 36090a04890c4764799aff575352d09a5

[6:0:0:0] disk EQLOGIC 100E-00 4.3 /dev/sdd 36090a04890c4764799af2576352d09ef

[7:0:0:0] disk EQLOGIC 100E-00 4.3 /dev/sde 36090a04890c4864799af5576352d0952

[8:0:0:0] disk EQLOGIC 100E-00 4.3 /dev/sdf 36090a04890c4864799af8576352d49e2

[9:0:0:0] disk EQLOGIC 100E-00 4.3 /dev/sdg 36090a04890c4864799afb576352d8951

[10:0:0:0] disk EQLOGIC 100E-00 4.3 /dev/sdh 36090a04890c4864799afe576352d9958

[11:0:0:0] disk EQLOGIC 100E-00 4.3 /dev/sdi 36090a04890c4964799af1577352d59de

[12:0:0:0] disk EQLOGIC 100E-00 4.3 /dev/sdj 36090a04890c4964799af4577352d2921

[13:0:0:0] disk EQLOGIC 100E-00 4.3 /dev/sdk 36090a04890c4a64799afa577352d392a

[14:0:0:0] disk EQLOGIC 100E-00 4.3 /dev/sdl 36090a04890c4964799af7577352db9bd

[15:0:0:0] disk EQLOGIC 100E-00 4.3 /dev/sdm 36090a04890c4a64799afd577352da9be

[16:0:0:0] disk EQLOGIC 100E-00 4.3 /dev/sdn 36090a04890c446f1e9af357a352d9913

[17:0:0:0] disk EQLOGIC 100E-00 4.3 /dev/sdo 36090a04890c4663689c4d57a352d89cb

[18:0:0:0] disk EQLOGIC 100E-00 4.3 /dev/sdp 36090a04890c4c63689c4057b352d0900

[19:0:0:0] disk EQLOGIC 100E-00 4.3 /dev/sdq 36090a04890c4761bded2957c352db9a7

[20:0:0:0] disk EQLOGIC 100E-00 4.3 /dev/sdr 36090a04890c4f61bded2c57c352d897e

[21:0:0:0] disk EQLOGIC 100E-00 4.3 /dev/sds 36090a04890c4567a8dd6557d352d9999

[22:0:0:0] disk EQLOGIC 100E-00 4.3 /dev/sdt 36090a04890c4d67a8dd6857d352df905

[23:0:0:0] disk EQLOGIC 100E-00 4.3 /dev/sdu 36090a04890c4367b8dd6b57d352d4950

[24:0:0:0] disk EQLOGIC 100E-00 4.3 /dev/sdv 36090a04890c4e64099afc575352d99a8

- Verify that the sizes are correct:

# fdisk -l|grep "Disk /dev/sd"|grep -v "sdb"|grep -v "sdc"|sort -k2

Disk /dev/sda: 299.4 GB, 299439751168 bytes, 584843264 sectors

Disk /dev/sdd: 107.4 GB, 107379425280 bytes, 209725440 sectors

Disk /dev/sde: 107.4 GB, 107379425280 bytes, 209725440 sectors

Disk /dev/sdf: 107.4 GB, 107379425280 bytes, 209725440 sectors

Disk /dev/sdg: 26.8 GB, 26848788480 bytes, 52439040 sectors

Disk /dev/sdh: 26.8 GB, 26848788480 bytes, 52439040 sectors

Disk /dev/sdi: 26.8 GB, 26848788480 bytes, 52439040 sectors

Disk /dev/sdj: 26.8 GB, 26848788480 bytes, 52439040 sectors

Disk /dev/sdk: 10.7 GB, 10742661120 bytes, 20981760 sectors

Disk /dev/sdl: 10.7 GB, 10742661120 bytes, 20981760 sectors

Disk /dev/sdm: 10.7 GB, 10742661120 bytes, 20981760 sectors

Disk /dev/sdn: 10.7 GB, 10742661120 bytes, 20981760 sectors

Disk /dev/sdo: 107.4 GB, 107379425280 bytes, 209725440 sectors

Disk /dev/sdp: 107.4 GB, 107379425280 bytes, 209725440 sectors

Disk /dev/sdq: 10.7 GB, 10742661120 bytes, 20981760 sectors

Disk /dev/sdr: 10.7 GB, 10742661120 bytes, 20981760 sectors

Disk /dev/sds: 107.4 GB, 107379425280 bytes, 209725440 sectors

Disk /dev/sdt: 107.4 GB, 107379425280 bytes, 209725440 sectors

Disk /dev/sdu: 107.4 GB, 107379425280 bytes, 209725440 sectors

Disk /dev/sdv: 107.4 GB, 107379425280 bytes, 209725440 sectors

- Write the udev rules that create a symbolic link for each disk, so that a stable name is available after the server reboots:

1) Back up the configuration file:

# cp -p /etc/udev/rules.d/99-oracle-asmdevices.rules /home/oracle/asmdevices.rules/99-oracle-asmdevices.rules.20190717

2) Add the lines for the new disks to the file, assigning each one a unique identifier (in this example asm-data08, asm-data09 and asm-data10):

# cat /etc/udev/rules.d/99-oracle-asmdevices.rules

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="36090a04890c4a64799afa577352d392a", SYMLINK+="asm-cluster01", OWNER="oracle", GROUP="asmadmin", MODE="0660"

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="36090a04890c4964799af4577352d2921", SYMLINK+="asm-flash01", OWNER="oracle", GROUP="asmadmin", MODE="0660"

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="36090a04890c4864799af8576352d49e2", SYMLINK+="asm-data01", OWNER="oracle", GROUP="asmadmin", MODE="0660"

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="36090a04890c4864799afb576352d8951", SYMLINK+="asm-flash02", OWNER="oracle", GROUP="asmadmin", MODE="0660"

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="36090a04890c4a64799afd577352da9be", SYMLINK+="asm-cluster02", OWNER="oracle", GROUP="asmadmin", MODE="0660"

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="36090a04890c4864799afe576352d9958", SYMLINK+="asm-flash03", OWNER="oracle", GROUP="asmadmin", MODE="0660"

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="36090a04890c4864799af5576352d0952", SYMLINK+="asm-data02", OWNER="oracle", GROUP="asmadmin", MODE="0660"

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="36090a04890c4764799af2576352d09ef", SYMLINK+="asm-data03", OWNER="oracle", GROUP="asmadmin", MODE="0660"

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="36090a04890c4764799aff575352d09a5", SYMLINK+="asm-data04", OWNER="oracle", GROUP="asmadmin", MODE="0660"

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="36090a04890c4567a8dd6557d352d9999", SYMLINK+="asm-data05", OWNER="oracle", GROUP="asmadmin", MODE="0660"

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="36090a04890c4964799af7577352db9bd", SYMLINK+="asm-cluster03", OWNER="oracle", GROUP="asmadmin", MODE="0660"

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="36090a04890c4964799af1577352d59de", SYMLINK+="asm-flash04", OWNER="oracle", GROUP="asmadmin", MODE="0660"

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="36090a04890c446f1e9af357a352d9913", SYMLINK+="asm-cluster04", OWNER="oracle", GROUP="asmadmin", MODE="0660"

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="36090a04890c4663689c4d57a352d89cb", SYMLINK+="asm-data06", OWNER="oracle", GROUP="asmadmin", MODE="0660"

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="36090a04890c4c63689c4057b352d0900", SYMLINK+="asm-data07", OWNER="oracle", GROUP="asmadmin", MODE="0660"

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="36090a04890c4761bded2957c352db9a7", SYMLINK+="asm-cluster05", OWNER="oracle", GROUP="asmadmin", MODE="0660"

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="36090a04890c4f61bded2c57c352d897e", SYMLINK+="asm-cluster06", OWNER="oracle", GROUP="asmadmin", MODE="0660"

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="36090a04890c4d67a8dd6857d352df905", SYMLINK+="asm-data08", OWNER="oracle", GROUP="asmadmin", MODE="0660"

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="36090a04890c4367b8dd6b57d352d4950", SYMLINK+="asm-data09", OWNER="oracle", GROUP="asmadmin", MODE="0660"

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="36090a04890c4e64099afc575352d99a8", SYMLINK+="asm-data10", OWNER="oracle", GROUP="asmadmin", MODE="0660"

- Refresh udev:

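# udevadm control --reload-rules

# udevadm trigger

The first command reloads the rules files; the second replays the device events, so the symlinks are also created for disks that are already present.

- Check the list of ASM disks available after the addition: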

# ls -l /dev/asm*

lrwxrwxrwx. 1 root root 4 Mar 11 17:54 /dev/asm-cluster01 -> sdk1

lrwxrwxrwx. 1 root root 4 Mar 11 17:55 /dev/asm-cluster02 -> sdm1

lrwxrwxrwx. 1 root root 4 Mar 11 17:55 /dev/asm-cluster03 -> sdl1

lrwxrwxrwx. 1 root root 4 Mar 11 17:55 /dev/asm-cluster04 -> sdn1

lrwxrwxrwx. 1 root root 4 Mar 11 17:54 /dev/asm-cluster05 -> sdq1

lrwxrwxrwx. 1 root root 4 Mar 11 17:55 /dev/asm-cluster06 -> sdr1

lrwxrwxrwx. 1 root root 4 Mar 11 17:55 /dev/asm-data01 -> sdf1

lrwxrwxrwx. 1 root root 4 Mar 11 17:55 /dev/asm-data02 -> sde1

lrwxrwxrwx. 1 root root 4 Mar 11 17:54 /dev/asm-data03 -> sdd1

lrwxrwxrwx. 1 root root 4 Mar 11 17:55 /dev/asm-data04 -> sdc1

lrwxrwxrwx. 1 root root 4 Mar 11 17:55 /dev/asm-data05 -> sds1

lrwxrwxrwx. 1 root root 4 Mar 11 17:55 /dev/asm-data06 -> sdo1

lrwxrwxrwx. 1 root root 4 Mar 11 17:53 /dev/asm-data07 -> sdp1

lrwxrwxrwx. 1 root root 4 Mar 11 17:53 /dev/asm-data08 -> sdt1

lrwxrwxrwx. 1 root root 4 Mar 11 17:54 /dev/asm-data09 -> sdu1

lrwxrwxrwx. 1 root root 4 Mar 11 17:55 /dev/asm-data10 -> sdv1

lrwxrwxrwx. 1 root root 4 Mar 11 17:53 /dev/asm-flash01 -> sdj1

lrwxrwxrwx. 1 root root 4 Mar 11 17:53 /dev/asm-flash02 -> sdg1

lrwxrwxrwx. 1 root root 4 Mar 11 17:55 /dev/asm-flash03 -> sdh1

lrwxrwxrwx. 1 root root 4 Mar 11 17:55 /dev/asm-flash04 -> sdi1

- Add the following line to /etc/scsi_id.config to configure the SCSI devices as trusted (create the file if it does not exist):

options=-g

- If possible, reboot the server to verify that the mapping is correct.

Here too, if you are in a RAC environment, perform steps 1 to 7 of this section on the other node as well.
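Because the rules match on the SCSI ID, which belongs to the LUN itself rather than to the sdX name a node happens to assign, the same rule lines should be usable on both RAC nodes. A minimal sketch of a hypothetical generator that prints rules in the same format as 99-oracle-asmdevices.rules from "SCSI_ID ALIAS" pairs, so the lines added on the first node can be reproduced on the second:

```shell
# Hypothetical helper: print one udev rule in the format used by
# /etc/udev/rules.d/99-oracle-asmdevices.rules. $parent is kept literal on purpose:
# udev expands it to the parent disk of the matched partition.
asm_udev_rule() {
  printf 'KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="%s", SYMLINK+="%s", OWNER="oracle", GROUP="asmadmin", MODE="0660"\n' "$1" "$2"
}

# Hypothetical helper: read "SCSI_ID ALIAS" pairs (one per line) and print a rule for each.
asm_udev_rules() {
  while read -r id name; do
    if [ -n "$id" ]; then
      asm_udev_rule "$id" "$name"
    fi
  done
}

# Usage (after backing up the rules file):
#   printf '%s\n' '36090a04890c4d67a8dd6857d352df905 asm-data08' \
#     | asm_udev_rules >> /etc/udev/rules.d/99-oracle-asmdevices.rules
```

Generating the lines instead of typing them also avoids typographic quotes slipping into the file, which udev would not parse.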

Adding the ASM disks

At this point, as the oracle user (owner of the Grid Infrastructure installation), it is time to add the disks to the disk groups.
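The disks are added further on with one alter diskgroup statement per disk. ASM also accepts several disks in a single ADD DISK clause, optionally followed by a REBALANCE POWER clause, which lets it plan one rebalance for all of them instead of restarting it after each statement. A minimal sketch of a hypothetical shell helper that builds such a statement:

```shell
# Hypothetical helper: print a single ALTER DISKGROUP statement that adds all the
# given disks and sets the rebalance power.
# Arguments: disk group name, rebalance power, then one or more disk paths.
add_disks_sql() {
  dg=$1
  power=$2
  shift 2
  list=""
  for d in "$@"; do
    list="${list:+$list, }'$d'"   # comma-separate the quoted disk paths
  done
  printf 'ALTER DISKGROUP %s ADD DISK %s REBALANCE POWER %s;\n' "$dg" "$list" "$power"
}

# Usage (as the grid owner):
#   add_disks_sql DG_DATA 6 /dev/asm-data08 /dev/asm-data09 /dev/asm-data10 | sqlplus -s / as sysasm
```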

- Check the total allocated space:

$ asmcmd

ASMCMD> lsdg

State Type Rebal Sector Logical_Sector Block AU Total_MB Free_MB Req_mir_free_MB Usable_file_MB Offline_disks Voting_files Name

MOUNTED EXTERN N 512 512 4096 4194304 61464 23816 0 23816 0 Y DG_CLUSTER/

MOUNTED EXTERN N 512 512 4096 4194304 716828 78152 0 78152 0 N DG_DATA/

MOUNTED EXTERN N 512 512 4096 4194304 102416 98904 0 98904 0 N DG_FLASH/

- Check the new disks:

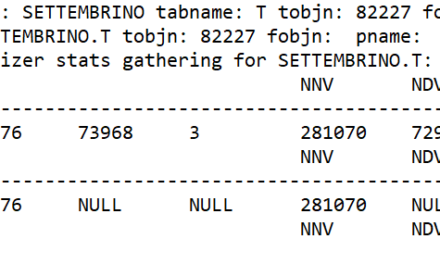

$ sqlplus / as sysasm

set lin 200

col DISK_GROUP_NAME FOR A30

col DISK_FILE_PATH FOR A30

col DISK_FILE_NAME FOR A30

col DISK_FILE_FAIL_GROUP FOR A30

SELECT NVL(a.name, '[CANDIDATE]') disk_group_name, b.path disk_file_path,

b.name disk_file_name, b.failgroup disk_file_fail_group

FROM v$asm_diskgroup a RIGHT OUTER JOIN v$asm_disk b USING (group_number)

ORDER BY a.name, b.path;

DISK_GROUP_NAME DISK_FILE_PATH DISK_FILE_NAME DISK_FILE_FAIL_GROUP

------------------------------ ------------------------------ ------------------------------ ---------------

DG_CLUSTER /dev/asm-cluster01 DG_CLUSTER_0000 DG_CLUSTER_0000

DG_CLUSTER /dev/asm-cluster02 DG_CLUSTER_0001 DG_CLUSTER_0001

DG_CLUSTER /dev/asm-cluster03 DG_CLUSTER_0002 DG_CLUSTER_0002

DG_CLUSTER /dev/asm-cluster04 DG_CLUSTER_0003 DG_CLUSTER_0003

DG_DATA /dev/asm-data01 DG_DATA_0000 DG_DATA_0000

DG_DATA /dev/asm-data02 DG_DATA_0001 DG_DATA_0001

DG_DATA /dev/asm-data03 DG_DATA_0002 DG_DATA_0002

DG_DATA /dev/asm-data04 DG_DATA_0003 DG_DATA_0003

DG_DATA /dev/asm-data05 DG_DATA_0004 DG_DATA_0004

DG_DATA /dev/asm-data06 DG_DATA_0005 DG_DATA_0005

DG_DATA /dev/asm-data07 DG_DATA_0006 DG_DATA_0006

DG_FLASH /dev/asm-flash01 DG_FLASH_0000 DG_FLASH_0000

DG_FLASH /dev/asm-flash02 DG_FLASH_0001 DG_FLASH_0001

DG_FLASH /dev/asm-flash03 DG_FLASH_0002 DG_FLASH_0002

DG_FLASH /dev/asm-flash04 DG_FLASH_0003 DG_FLASH_0003

[CANDIDATE] /dev/asm-data08

[CANDIDATE] /dev/asm-data09

[CANDIDATE] /dev/asm-data10

- Add the disks:

alter diskgroup DG_DATA add disk '/dev/asm-data08';

alter diskgroup DG_DATA add disk '/dev/asm-data09';

alter diskgroup DG_DATA add disk '/dev/asm-data10';

- Check the disk status:

$ sqlplus / as sysasm

set lin 300

col FAILGROUP for a14

col PATH for a20

col name for a16

col MOUNT_STATUS for a12

col HEADER_STATUS for a20

col MODE_STATUS for a10

select

NAME, HEADER_STATUS, STATE, MOUNT_DATE, PATH, round(TOTAL_MB/1024) TotalGB,

round(FREE_MB/1024) FreeGB from v$asm_disk order by name, path;

NAME HEADER_STATUS STATE MOUNT_DATE PATH TOTALGB FREEGB

---------------- -------------------- ------------------------ ------------------- -------------------- ---------- ----------

DG_CLUSTER_0000 MEMBER NORMAL 23.05.2018 11:44:40 /dev/asm-cluster01 10 2

DG_CLUSTER_0001 MEMBER NORMAL 23.05.2018 11:44:40 /dev/asm-cluster02 10 2

DG_CLUSTER_0002 MEMBER NORMAL 23.05.2018 11:44:40 /dev/asm-cluster03 10 2

DG_CLUSTER_0003 MEMBER NORMAL 23.05.2018 11:44:40 /dev/asm-cluster04 10 2

DG_DATA_0000 MEMBER NORMAL 23.05.2018 13:40:53 /dev/asm-data01 100 11

DG_DATA_0001 MEMBER NORMAL 23.05.2018 13:40:53 /dev/asm-data02 100 11

DG_DATA_0002 MEMBER NORMAL 23.05.2018 13:40:53 /dev/asm-data03 100 11

DG_DATA_0003 MEMBER NORMAL 23.05.2018 13:40:53 /dev/asm-data04 100 11

DG_DATA_0004 MEMBER NORMAL 23.05.2018 13:40:53 /dev/asm-data05 100 11

DG_DATA_0005 MEMBER NORMAL 25.01.2019 11:24:57 /dev/asm-data06 100 11

DG_DATA_0006 MEMBER NORMAL 25.01.2019 11:24:57 /dev/asm-data07 100 11

DG_FLASH_0000 MEMBER NORMAL 23.05.2018 13:42:32 /dev/asm-flash01 25 24

DG_FLASH_0001 MEMBER NORMAL 23.05.2018 13:42:32 /dev/asm-flash02 25 24

DG_FLASH_0002 MEMBER NORMAL 23.05.2018 13:42:32 /dev/asm-flash03 25 24

DG_FLASH_0003 MEMBER NORMAL 23.05.2018 13:42:32 /dev/asm-flash04 25 24

DG_DATA_0008 MEMBER NORMAL 23.05.2018 13:40:53 /dev/asm-data08 100 11

DG_DATA_0009 MEMBER NORMAL 25.01.2019 11:24:57 /dev/asm-data09 100 11

DG_DATA_0010 MEMBER NORMAL 25.01.2019 11:24:57 /dev/asm-data10 100 11

- Monitor the rebalance progress:

set lin 300

col PASS for a10

col state for a8

col power for 999

col ERROR_CODE for a10

select * from v$asm_operation;

GROUP_NUMBER OPERATION PASS STATE POWER ACTUAL SOFAR EST_WORK EST_RATE EST_MINUTES ERROR_CODE CON_ID

------------ --------------- ---------- -------- ----- ---------- ---------- ---------- ---------- ----------- ------- -------

1 REBAL COMPACT WAIT 1 1 0 0 0 0 0

1 REBAL REBALANCE RUN 1 1 1938 2096 28885 0 0

1 REBAL REBUILD DONE 1 1 0 0 0 0 0

- If needed, speed up the rebalance by raising its power:

SQL> alter diskgroup DG_DATA rebalance power 6;

Diskgroup altered.

- Check the total allocated space again:

$ asmcmd

ASMCMD> lsdg

State Type Rebal Sector Logical_Sector Block AU Total_MB Free_MB Req_mir_free_MB Usable_file_MB Offline_disks Voting_files Name

MOUNTED EXTERN N 512 512 4096 4194304 61464 23816 0 23816 0 Y DG_CLUSTER/

MOUNTED EXTERN N 512 512 4096 4194304 1024040 385364 0 78152 0 N DG_DATA/

MOUNTED EXTERN N 512 512 4096 4194304 102416 98904 0 98904 0 N DG_FLASH/

The DG_DATA disk group now includes the additional space of the 3 new disks.
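The increase can be checked with a little arithmetic on the two lsdg outputs: Total_MB for DG_DATA goes from 716828 to 1024040, that is 307212 MB added, or 102404 MB for each of the 3 disks, consistent with 100 GB LUNs. A minimal sketch of a hypothetical helper that extracts Total_MB for one disk group, assuming the lsdg column layout shown above (Total_MB is the 8th column and the name, with its trailing slash, is the last):

```shell
# Hypothetical helper: read "asmcmd lsdg" output on stdin and print Total_MB
# for the given disk group. Assumes Total_MB is the 8th column and the Name
# column (with its trailing "/") is the last one, as in the output above.
total_mb() {
  awk -v dg="$1/" '$NF == dg { print $8 }'
}

# Usage: save "asmcmd lsdg" before and after adding the disks
# (lsdg.before.log and lsdg.after.log are hypothetical file names), then
#   before=$(total_mb DG_DATA < lsdg.before.log)
#   after=$(total_mb DG_DATA < lsdg.after.log)
#   echo "added: $((after - before)) MB"
```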
